There are three buzzwords that, if we had our way, would be stricken completely from the world: "cloud," "the Internet of Things," and "big data." Each of them was coined in an attempt to elegantly capture a complex concept, and each of them fails miserably. "Cloud" is a wreck of a term that has no fixed definition (with the closest usually being "someone else’s servers"); "Internet of Things" is so terrible and uninformative that its usage should be punishable by death; and then there’s "big data," which doesn’t appear to actually mean anything.

We’re going to focus on that last term here, because there’s actually a fascinating concept behind the opaque and stupid buzzword. On the surface, "big data" sounds like it ought to have something to do with, say, storing tremendous amounts of data. Frankly, it does, but that’s only part of the picture. Wikipedia has an extremely long, extremely thorough (and overly complex) breakdown of the term, but to save you two hours of reading: big data as a buzzword refers to the entire process of gathering and storing tremendous amounts of data, then applying tremendous amounts of computing power and advanced algorithms to that data in order to pick out trends and connect dots that would otherwise be invisible and impossible to connect within the mass.

For an even simpler dinner party definition: when someone says "big data," they’re talking about using computers to find trends in enormous collections of information—trends that people can’t pick out because there’s too much data for humans to sift through.

Old concept, new word?

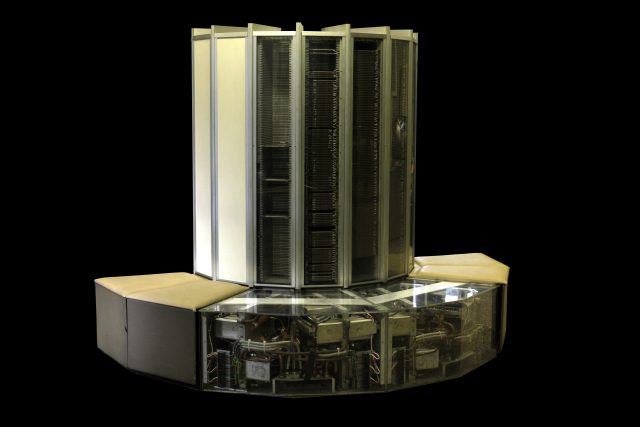

Computerized analysis of piles and piles of data isn’t new, of course. Doing tedious things with numbers has been the primary function of computers since computers were invented (and long before, back to the time when the word "computer" meant "person in the back room who does tedious things with numbers"). Setting aside the marketing efforts of companies that sell "big data" solutions to other companies, the term has suddenly come into vogue because only within the past 10 to 15 years have improvements in data processing, on both the hardware and the software side, come together to make holistic analysis of truly staggering amounts of data possible.

What kind of scale are we talking about here? Usually "big data" means you’re sifting through at least many hundreds of gigabytes using some manner of sophisticated algorithm and usually distributing the load between two or more computers. Big enterprises or scientific concerns can work with sets of data that easily enter into the terabyte or petabyte range—even orders of magnitude larger in extreme cases.
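To put that scale in perspective, here is a back-of-envelope sketch in Python. It assumes a hypothetical sustained read rate of 200 MB/s per machine and a perfectly even split of work with no coordination overhead, so treat the numbers as a best case rather than a benchmark.

```python
# Back-of-envelope estimate of how long one full pass over a dataset takes.
# Assumption: every machine reads at the same steady rate and the work splits
# perfectly evenly; real clusters lose some time to coordination.

def scan_time_seconds(total_bytes: float, bytes_per_second: float, machines: int = 1) -> float:
    """Ideal time to read `total_bytes` when `machines` each read at `bytes_per_second`."""
    if machines < 1:
        raise ValueError("need at least one machine")
    return total_bytes / (bytes_per_second * machines)


TERABYTE = 10**12          # decimal terabyte
DISK_RATE = 200 * 10**6    # hypothetical 200 MB/s sustained read per machine

one_machine = scan_time_seconds(TERABYTE, DISK_RATE)          # 5000 s
fifty_machines = scan_time_seconds(TERABYTE, DISK_RATE, 50)   # 100 s
print(f"1 machine:   {one_machine / 60:.0f} minutes")   # 1 machine:   83 minutes
print(f"50 machines: {fifty_machines:.0f} seconds")     # 50 machines: 100 seconds
```

Under those assumptions, one machine needs roughly 83 minutes just to read a terabyte once, while 50 machines finish the same pass in under two minutes. That gap is why the load gets spread across many computers.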

To keep this explanation from getting too deep, there are four aspects of big data that really matter. We’re going to briefly touch on three and then wrap up with a few examples of big data in the real world.

A four-legged stool

First, of course, is the actual collection of whatever data makes up your big data pile. That’s an external concern here—if you’re a cellular provider, for example, you might have hundreds of millions (or more) of customer and device records in some kind of distributed database. If you’re a researcher, you might have an experiment that generates many gigabytes of data points, every hour, that you’re tracking over the course of days or weeks. The actual data is of course important, but how you get it is mostly immaterial. What matters is that you have it and you need to sift it.

The second factor is data storage. You’ve got to have a way to keep your gigabytes or terabytes or petabytes (or exabytes) of data, and keep it in such a way that you’re able to access it randomly, at whatever point you need, with the ability to do useful things to it. This can mean using a distributed file system (something like Gluster) to treat many computers’ drives like they’re part of a single volume; it could mean using external cloud storage like Amazon EBS; it could mean—if you can afford it—using a big expensive storage area network. There are lots of ways to hold lots of data, but you’ve got to have some kind of bucket to dump it into.
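One common way to make many machines’ drives behave like a single pool is to let a hash of each file’s name decide which machine holds it, so any client can work out where a file lives without asking a central directory. The Python sketch below is a toy illustration of that idea with hypothetical node names; it is not Gluster’s actual placement algorithm.

```python
import hashlib
from collections import defaultdict

# Toy model of spreading files across several machines' drives so they can be
# treated as one pool of storage. Each file name is hashed, and the hash picks
# the node that stores it. Node names are hypothetical.

NODES = ("node-a", "node-b", "node-c", "node-d")


def node_for(filename: str, nodes: tuple[str, ...] = NODES) -> str:
    """Deterministically map a file name to one storage node."""
    digest = hashlib.md5(filename.encode("utf-8")).digest()
    return nodes[int.from_bytes(digest[:8], "big") % len(nodes)]


def place(filenames: list[str], nodes: tuple[str, ...] = NODES) -> dict[str, list[str]]:
    """Group file names by the node each one lands on."""
    layout: dict[str, list[str]] = defaultdict(list)
    for name in filenames:
        layout[node_for(name, nodes)].append(name)
    return dict(layout)


if __name__ == "__main__":
    files = [f"sensor-log-{i:04d}.csv" for i in range(1000)]
    for node, names in sorted(place(files).items()):
        print(node, len(names))
```

Plain modulo hashing has a catch: add or remove a node and most files map to a different place. Production systems use schemes such as consistent hashing or explicitly assigned hash ranges to limit how much data has to move.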

You next need some computers to actually do something with all your data—be they racks of servers, rented elastic cloud devices, purpose-built appliances, or whatever. And you probably need a lot of computing power, too. Archimedes’ famous Doric quote applies here: "Give me a place to stand and with a lever I will move the whole world." Replace "lever" with "enough computing power" and you’ve got the idea.

But then there’s that fourth leg: software. To torture the metaphor a bit past the breaking point, if "computing power" stands in for Archimedes’ lever, then "software that can do useful stuff with that computing power" is Archimedes’ place to stand. Though scads of computing power are a requirement, the right software is the secret sauce that makes big data work. The right algorithm can make the difference between a search through your data set that takes an hour and one that takes a few seconds.
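To make the "hour versus seconds" point concrete, the Python sketch below answers the same lookup two ways and counts comparisons rather than timing anything. The records are hypothetical customer IDs. A linear scan checks records one by one; binary search over sorted data halves the remaining range at each step.

```python
# Same question, two algorithms: "is this customer ID in our records?"
# Linear scan: O(n) comparisons per lookup.
# Binary search on sorted data: O(log n) comparisons per lookup.

def linear_contains(records: list[int], target: int) -> tuple[bool, int]:
    """Return (found, comparisons) for a front-to-back scan."""
    comparisons = 0
    for value in records:
        comparisons += 1
        if value == target:
            return True, comparisons
    return False, comparisons


def binary_contains(sorted_records: list[int], target: int) -> tuple[bool, int]:
    """Return (found, comparisons) for binary search on an ascending list."""
    lo, hi, comparisons = 0, len(sorted_records), 0
    while lo < hi:
        mid = (lo + hi) // 2
        comparisons += 1
        if sorted_records[mid] < target:
            lo = mid + 1      # target can only be to the right of mid
        else:
            hi = mid          # target is at mid or to its left
    found = lo < len(sorted_records) and sorted_records[lo] == target
    return found, comparisons


records = list(range(0, 2_000_000, 2))       # one million hypothetical, sorted IDs
print(linear_contains(records, 1_999_998))   # (True, 1000000)
print(binary_contains(records, 1_999_998))
```

On a million records, the scan needs a million comparisons to reach the last ID, while binary search needs about 20. The catch is that the data must be sorted (or otherwise indexed) first, a one-time cost that pays for itself over many lookups.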

The where and what

The easiest example of "big data" is one that everyone reading this article is familiar with—Google’s search. It works so quickly and so reliably that it’s rare to spare a thought about what’s going on under the hood, but those search results generated in milliseconds are the result of huge amounts of distributed processing power churning through vast datasets. The hugely oversimplified way it all works is that rather than searching through pages directly, Google keeps an index of words and collections of words that appear on webpages, and it’s that index against which your search terms are applied. It’s far faster to look something up in an index than to scan a whole page.
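The index idea is easy to sketch. The Python below builds a minimal inverted index (each word maps to the set of pages containing it) and answers a query by intersecting those sets instead of rereading every page. It is hugely simplified in the same way as the description above: the page IDs and texts are made up, and real search engines also rank results, track word positions, and much more.

```python
import re
from collections import defaultdict

# Minimal inverted index: word -> set of page IDs that contain the word.


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages: dict[str, str]) -> dict[str, set[str]]:
    """Scan every page once and record which pages each word appears on."""
    index: dict[str, set[str]] = defaultdict(set)
    for page_id, text in pages.items():
        for word in tokenize(text):
            index[word].add(page_id)
    return dict(index)


def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return pages containing every word in the query (AND semantics)."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


pages = {
    "page1": "Big data means finding trends in huge datasets",
    "page2": "MapReduce splits work across many servers",
    "page3": "Hadoop brings MapReduce to big data clusters",
}
index = build_index(pages)
print(sorted(search(index, "big data")))           # ['page1', 'page3']
print(sorted(search(index, "MapReduce servers")))  # ['page2']
```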

However, to generate the index, they do have to scan through whole pages. Google used to use a framework called MapReduce for this—parceling the scanning out across a huge number of servers and integrating the results back into an index. MapReduce has long since been retired by Google in favor of more advanced applications that can handle larger and larger data sets.
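The MapReduce programming model splits that job into three phases: a map step that runs independently on each chunk of input, a shuffle that groups intermediate results by key, and a reduce step that combines each group. The Python sketch below simulates all three in a single process to build an inverted index; in a real cluster, the map calls would run on many servers and the shuffle would move data over the network. It illustrates the model only, not Google’s implementation.

```python
import re
from collections import defaultdict
from itertools import chain

# Single-process simulation of the MapReduce model, building an inverted index.


def map_page(page_id: str, text: str):
    """Map: emit (word, page_id) for every distinct word on one page."""
    for word in set(re.findall(r"[a-z0-9]+", text.lower())):
        yield word, page_id


def shuffle(pairs):
    """Shuffle: group all emitted values by their key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_word(word: str, page_ids: list[str]) -> tuple[str, list[str]]:
    """Reduce: collapse one word's page IDs into a sorted posting list."""
    return word, sorted(set(page_ids))


def map_reduce_index(pages: dict[str, str]) -> dict[str, list[str]]:
    mapped = chain.from_iterable(map_page(pid, text) for pid, text in pages.items())
    grouped = shuffle(mapped)
    return dict(reduce_word(word, ids) for word, ids in grouped.items())


pages = {
    "page1": "big data big trends",
    "page2": "data on many servers",
}
index = map_reduce_index(pages)
print(index["data"])  # ['page1', 'page2']
print(index["big"])   # ['page1']
```

Because each map call looks at only one page and each reduce call at only one word, both phases can be parceled out to as many machines as are available.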

Even though Google doesn’t use it anymore, the MapReduce model lives on in Apache Hadoop, an open source big data framework whose MapReduce implementation sees tremendous usage in the real world by many companies and research institutions.
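Hadoop’s Streaming interface lets the map and reduce steps be ordinary programs that read lines on standard input and write tab-separated key/value lines on standard output, with the framework sorting the mapper output by key before the reducer sees it. Below is a minimal word-count sketch in that style; the function names and the `map`/`reduce` command-line switch are our own choices, not part of Hadoop.

```python
import sys
from itertools import groupby

# Word count written for the Hadoop Streaming contract: read lines on stdin,
# write "key<TAB>value" lines on stdout. The framework sorts mapper output by
# key, so the reducer sees all lines for one word next to each other.


def mapper(lines):
    """Emit 'word<TAB>1' for every word in every input line."""
    for line in lines:
        for word in line.lower().split():
            yield f"{word}\t1"


def reducer(sorted_lines):
    """Sum the counts for each run of identical keys in key-sorted input."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in sorted_lines)
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        yield f"{word}\t{sum(int(count) for _, count in group)}"


if __name__ == "__main__" and len(sys.argv) > 1:
    # Local dry run, with `sort` standing in for Hadoop's shuffle:
    #   cat input.txt | python3 wc.py map | sort | python3 wc.py reduce
    stage = {"map": mapper, "reduce": reducer}[sys.argv[1]]
    for out in stage(sys.stdin):
        print(out)
```

Running the pipeline locally with `sort` in the middle is a cheap way to check the logic before handing the same script to a cluster.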

Another easy-to-swallow example of big data can be seen in huge-scale manufacturing. Remember that big data is about finding data needles in data haystacks, and each step in a complex manufacturing process can generate tremendous amounts of data. If you’ve got a huge assembly line that builds components out of parts that themselves each have manufacturing processes behind them, it can be extremely difficult to trace problems in completed parts back to assembly errors. There are simply so many compounded variables. However, with enough computing power and the right algorithms, the problem becomes much easier to tackle: trends that might not be visible to the naked eye (or even to hundreds of naked eyes) can be brought to light.
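A very simple version of tracing failures back upstream is to record which lot each finished part used at every stage and then compare defect rates lot by lot. The Python sketch below does exactly that on a handful of made-up parts; the stage and lot names are hypothetical, and real manufacturing analytics would add statistical tests to separate genuine signals from noise rather than just ranking raw rates.

```python
from collections import defaultdict

# Toy root-cause search: each finished part records the upstream lot it used
# at each stage, plus whether it failed final inspection. Rank every
# (stage, lot) pair by defect rate so a suspicious lot floats to the top.


def defect_rates(parts: list[dict], stages: list[str], min_parts: int = 1):
    counts = defaultdict(lambda: [0, 0])  # (stage, lot) -> [defective, total]
    for part in parts:
        for stage in stages:
            tally = counts[(stage, part[stage])]
            tally[1] += 1
            if part["defective"]:
                tally[0] += 1
    rates = [
        (defective / total, stage, lot, total)
        for (stage, lot), (defective, total) in counts.items()
        if total >= min_parts  # skip lots with too few parts to judge
    ]
    return sorted(rates, reverse=True)  # highest defect rate first


parts = [  # hypothetical inspection records
    {"casting_lot": "C1", "coating_lot": "K1", "defective": False},
    {"casting_lot": "C1", "coating_lot": "K2", "defective": True},
    {"casting_lot": "C2", "coating_lot": "K2", "defective": True},
    {"casting_lot": "C2", "coating_lot": "K1", "defective": False},
    {"casting_lot": "C1", "coating_lot": "K2", "defective": True},
    {"casting_lot": "C2", "coating_lot": "K1", "defective": False},
]
print(defect_rates(parts, ["casting_lot", "coating_lot"])[0])
# (1.0, 'coating_lot', 'K2', 3)
```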

In fact, when we visited GE’s research center in Munich last month, we saw exactly that. Analytics in manufacturing is a big business all on its own. Being able to figure out that, say, a variance in humidity on one day in one manufacturer’s assembly plant caused one set of components to be built slightly out of tolerance, which in turn affected some other component a hundred steps down the line, can save tremendous amounts of time and money.

One example that got a lot of media attention a few years ago was T-Mobile’s use of big data to suss out patterns in customer cancellations. Among other things, T-Mobile looked at how their subscribers were connected together (who called whom, basically) and attempted to figure out each caller’s effective level of influence relative to other callers. They noted that some of their customers could kick off what they called "contagious churn," where one customer canceling would lead to others canceling. T-Mobile also scrutinized data outside of their billing database, looking at dropped calls and other "non-billable" indicators of customer annoyance. Then they pulled all this together and began focusing efforts on proactively helping out higher-influence customers, like offering a femtocell to a high-influence customer who has moved to a new area with bad service, to keep that customer from leaving T-Mobile and taking their circle with them.
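The general shape of that analysis can be sketched in a few lines. The Python below uses the simplest possible stand-in for "influence" (how many distinct people a subscriber exchanges calls with, known as degree centrality) and flags well-connected subscribers who are also seeing many dropped calls. This is our own illustration with made-up names and hypothetical thresholds, not T-Mobile’s actual method.

```python
from collections import defaultdict

# Influence proxy: number of distinct contacts in the call graph (degree).
# Flag subscribers who are both well connected and annoyed by dropped calls.


def contact_counts(calls: list[tuple[str, str]]) -> dict[str, int]:
    """Count distinct people each subscriber has called or been called by."""
    neighbors: dict[str, set[str]] = defaultdict(set)
    for caller, callee in calls:
        if caller != callee:
            neighbors[caller].add(callee)
            neighbors[callee].add(caller)
    return {person: len(contacts) for person, contacts in neighbors.items()}


def at_risk_influencers(calls: list[tuple[str, str]], dropped_calls: dict[str, int],
                        min_contacts: int = 3, min_dropped: int = 5) -> list[str]:
    """Subscribers with many contacts AND many dropped calls (hypothetical thresholds)."""
    degree = contact_counts(calls)
    return sorted(
        person for person, n in degree.items()
        if n >= min_contacts and dropped_calls.get(person, 0) >= min_dropped
    )


calls = [("ann", "bob"), ("ann", "cy"), ("ann", "dee"), ("bob", "cy"),
         ("eve", "ann"), ("bob", "ann")]
dropped = {"ann": 7, "bob": 9, "cy": 1}
print(contact_counts(calls)["ann"])         # 4
print(at_risk_influencers(calls, dropped))  # ['ann']
```

Note that "bob" has the most dropped calls but only two contacts, so under these thresholds the outreach budget would go to "ann" first.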

The web we weave

Now, when someone turns to you and says, "Hey, I hear you read that A-R-S Technical website—what does 'big data' mean, anyway?" you can face them down with a confident grin and fire back with an answer! It’s all about sorting variables and tracking them, piecing together things that humans can’t. Computers are very good at sifting tremendous quantities of information (with the right software, of course), and that’s the core of big data.

Speaking of tremendous amounts of data: while we’re focusing this week on our trip to Munich and the stories inspired by our visit there, this isn’t the end of our discussion about big data. If you’re intrigued by the topic, you’ll love what we’re doing in a few weeks when Sean Gallagher digs into exactly how GE deals with the data their manufacturing processes generate.
