How to see into the future


Irving Fisher was once the most famous economist in the world. Some would say he was the greatest economist who ever lived. “Anywhere from a decade to two generations ahead of his time,” opined the first Nobel laureate economist Ragnar Frisch, in the late 1940s, more than half a century after Fisher’s genius first lit up his subject. But while Fisher’s approach to economics is firmly embedded in the modern discipline, many of those who remember him now know just one thing about him: that two weeks before the great Wall Street crash of 1929, Fisher announced, “Stocks have reached what looks like a permanently high plateau.”

In the 1920s, Fisher had two great rivals. One was a British academic: John Maynard Keynes, a rising star and Fisher’s equal as an economic theorist and policy adviser. The other was a commercial competitor, an American like Fisher. Roger Babson was a serial entrepreneur with no serious academic credentials, inspired to sell economic forecasts by the banking crisis of 1907. As Babson and Fisher locked horns over the following quarter-century, they laid the foundations of the modern economic forecasting industry.

Fisher’s rivals fared better than he did. Babson foretold the crash and made a fortune, enough to endow the well-respected Babson College. Keynes was caught out by the crisis but recovered and became rich anyway. Fisher died in poverty, ruined by the failure of his forecasts.

If Fisher and Babson could see the modern forecasting industry, its scale, range and hyperactivity would astonish them. In his acerbic book The Fortune Sellers, former consultant William Sherden reckoned in 1998 that forecasting was a $200bn industry – $300bn in today’s terms – and the bulk of the money was being made in business, economic and financial forecasting.

It is true that forecasting now seems ubiquitous. Data analysts forecast demand for new products, or the impact of a discount or special offer; scenario planners (I used to be one) produce broad-based narratives with the aim of provoking fresh thinking; nowcasters look at Twitter or Google to track epidemics, actual or metaphorical, in real time; intelligence agencies look for clues about where the next geopolitical crisis will emerge; and banks, finance ministries, consultants and international agencies release regular prophecies covering dozens, even hundreds, of macroeconomic variables.

Real breakthroughs have been achieved in certain areas, especially where rich datasets have become available – for example, weather forecasting, online retailing and supply-chain management. Yet when it comes to the headline-grabbing business of geopolitical or macroeconomic forecasting, it is not clear that we are any better at the fundamental task that the industry claims to fulfil – seeing into the future.

So why is forecasting so difficult – and is there hope for improvement? And why did Babson and Keynes prosper while Fisher suffered? What did they understand that Fisher, for all his prodigious talents, did not?

In 1987, a young Canadian-born psychologist, Philip Tetlock, planted a time bomb under the forecasting industry that would not explode for 18 years. Tetlock had been trying to figure out what, if anything, the social sciences could contribute to the fundamental problem of the day, which was preventing a nuclear apocalypse. He soon found himself frustrated: frustrated by the fact that the leading political scientists, Sovietologists, historians and policy wonks took such contradictory positions about the state of the cold war; frustrated by their refusal to change their minds in the face of contradictory evidence; and frustrated by the many ways in which even failed forecasts could be justified. “I was nearly right but fortunately it was Gorbachev rather than some neo-Stalinist who took over the reins.” “I made the right mistake: far more dangerous to underestimate the Soviet threat than overestimate it.” Or, of course, the get-out for all failed stock market forecasts, “Only my timing was wrong.”

Tetlock’s response was patient, painstaking and quietly brilliant. He began to collect forecasts from almost 300 experts, eventually accumulating 27,500. The main focus was on politics and geopolitics, with a selection of questions from other areas such as economics thrown in. Tetlock sought clearly defined questions, enabling him with the benefit of hindsight to pronounce each forecast right or wrong. Then Tetlock simply waited while the results rolled in – for 18 years.

Tetlock published his conclusions in 2005, in a subtle and scholarly book, Expert Political Judgment. He found that his experts were terrible forecasters. This was true in both the simple sense that the forecasts failed to materialise and in the deeper sense that the experts had little idea of how confident they should be in making forecasts in different contexts. It is easier to make forecasts about the territorial integrity of Canada than about the territorial integrity of Syria but, beyond the most obvious cases, the experts Tetlock consulted failed to distinguish the Canadas from the Syrias.

Adding to the appeal of this tale of expert hubris, Tetlock found that the most famous experts fared somewhat worse than those outside the media spotlight. Other than that, the humiliation was evenly distributed. Regardless of political ideology, profession and academic training, experts failed to see into the future.

Most people, hearing about Tetlock’s research, simply conclude that either the world is too complex to forecast, or that experts are too stupid to forecast it, or both. Tetlock himself refused to embrace cynicism so easily. He wanted to leave open the possibility that even for these intractable human questions of macroeconomics and geopolitics, a forecasting approach might exist that would bear fruit.

…

In 2013, on the auspicious date of April 1, I received an email from Tetlock inviting me to join what he described as “a major new research programme funded in part by Intelligence Advanced Research Projects Activity, an agency within the US intelligence community.”

The core of the programme, which had been running since 2011, was a collection of quantifiable forecasts much like Tetlock’s long-running study. The forecasts would be of economic and geopolitical events, “real and pressing matters of the sort that concern the intelligence community – whether Greece will default, whether there will be a military strike on Iran, etc”. These forecasts took the form of a tournament with thousands of contestants; it is now at the start of its fourth and final annual season.

“You would simply log on to a website,” Tetlock’s email continued, “give your best judgment about matters you may be following anyway, and update that judgment if and when you feel it should be. When time passes and forecasts are judged, you could compare your results with those of others.”

I elected not to participate but 20,000 others have embraced the idea. Some could reasonably be described as having some professional standing, with experience in intelligence analysis, think-tanks or academia. Others are pure amateurs. Tetlock and two other psychologists, Don Moore and Barbara Mellers, have been running experiments with the co-operation of this army of volunteers. (Mellers and Tetlock are married.) Some were given training in how to turn knowledge about the world into a probabilistic forecast; some were assembled into teams; some were given information about other forecasts while others operated in isolation. The entire exercise was given the name of the Good Judgment Project, and the aim was to find better ways to see into the future.

The early years of the forecasting tournament have, wrote Tetlock, “already yielded exciting results”.

A first insight is that even brief training works: a 20-minute course about how to put a probability on a forecast, correcting for well-known biases, provides lasting improvements to performance. This might seem extraordinary – and the benefits were surprisingly large – but even experienced geopolitical seers tend to have expertise in a subject, such as Europe’s economies or Chinese foreign policy, rather than training in the task of forecasting itself.

A second insight is that teamwork helps. When the project assembled the most successful forecasters into teams who were able to discuss and argue, they produced better predictions.

But ultimately one might expect the same basic finding as always: that forecasting events is basically impossible. Wrong. To connoisseurs of the frailties of futurology, the results of the Good Judgment Project are quite astonishing. Forecasting is possible, and some people – call them “superforecasters” – can predict geopolitical events with an accuracy far outstripping chance. The superforecasters have been able to sustain and even improve their performance.

The cynics were too hasty: for people with the right talents or the right tactics, it is possible to see into the future after all.

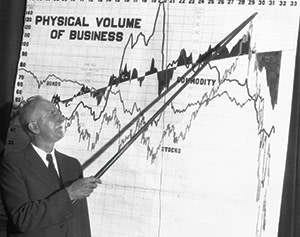

Roger Babson, Irving Fisher’s competitor, would always have claimed as much. A serial entrepreneur, Babson made his fortune selling economic forecasts alongside information about business conditions. In 1920, the Babson Statistical Organization had 12,000 subscribers and revenue of $1.35m – almost $16m in today’s money.

“After Babson, the forecaster was an instantly recognisable figure in American business,” writes Walter Friedman, the author of Fortune Tellers, a history of Babson, Fisher and other early economic forecasters. Babson certainly understood how to sell himself and his services. He advertised heavily and wrote prolifically. He gave a complimentary subscription to Thomas Edison, hoping for a celebrity endorsement. After contracting tuberculosis, Babson turned his management of the disease into an inspirational business story. He even employed stonecutters to carve inspirational slogans into large rocks in Massachusetts (the “Babson Boulders” are still there).

On September 5 1929, Babson made a speech at a business conference in Wellesley, Massachusetts. He predicted trouble: “Sooner or later a crash is coming which will take in the leading stocks and cause a decline of from 60 to 80 points in the Dow-Jones barometer.” This would have been a fall of around 20 per cent.

So famous had Babson become that his warning was briefly a self-fulfilling prophecy. When the news tickers of New York reported Babson’s comments at around 2pm, the markets erupted into what The New York Times described as “a storm of selling”. Shares lurched down by 3 per cent. This became known as the “Babson break”.

The next day, shares bounced back and Babson, for a few weeks, appeared ridiculous. On October 29, the great crash began, and within a fortnight the market had fallen almost 50 per cent. By then, Babson had an advertisement in the New York Times pointing out, reasonably, that “Babson clients were prepared”. Subway cars were decorated with the slogan, “Be Right with Babson”. For Babson, his forecasting triumph was a great opportunity to sell more subscriptions.

But his true skill was marketing, not forecasting. His key product, the “Babson chart”, looked scientific and was inspired by the discoveries of Isaac Newton, his idol. The Babson chart operated on the Newtonian assumption that any economic expansion would be matched by an equal and opposite contraction. But for all its apparent sophistication, the Babson chart offered a simple and usually contrarian message.

“Babson offered an up-arrow or a down-arrow. People loved that,” says Walter Friedman. Whether or not Babson’s forecasts were accurate was not a matter that seemed to concern many people. When he was right, he advertised the fact heavily. When he was wrong, few noticed. And Babson had indeed been wrong for many years during the long boom of the 1920s. People taking his advice would have missed out on lucrative opportunities to invest. That simply didn’t matter: his services were popular, and his most spectacularly successful prophecy was also his most famous.

Babson’s triumph suggests an important lesson: commercial success as a forecaster has little to do with whether you are any good at seeing into the future. No doubt it helped his case when his forecasts were correct but nobody gathered systematic information about how accurate he was. The Babson Statistical Organization compiled business and economic indicators that were, in all probability, of substantial value in their own right. Babson’s prognostications were the peacock’s plumage; their effect was simply to attract attention to the services his company provided.

…

When Barbara Mellers, Don Moore and Philip Tetlock established the Good Judgment Project, the basic principle was to collect specific predictions about the future and then check to see if they came true. That is not the world Roger Babson inhabited and neither does it describe the task of modern pundits.

When we talk about the future, we often aren’t talking about the future at all but about the problems of today. A newspaper columnist who offers a view on the future of North Korea, or the European Union, is trying to catch the eye, support an argument, or convey in a couple of sentences a worldview that would otherwise be impossibly unwieldy to explain. A talking head in a TV studio offers predictions by way of making conversation. A government analyst or corporate planner may be trying to justify earlier decisions, engaging in bureaucratic defensiveness. And many election forecasts are simple acts of cheerleading for one side or the other.

Unlike the predictions collected by the Good Judgment Project, many forecasts are vague enough in their details to allow the mistaken seer off the hook. Even if it were possible to pronounce that a forecast had come true or not, only in a few hotly disputed cases would anybody bother to check.

All this suggests that among the various strategies employed by the superforecasters of the Good Judgment Project, the most basic explanation of their success is that they have the single uncompromised objective of seeing into the future – and this is rare. They receive continual feedback about the success and failure of every forecast, and there are no points for radicalism, originality, boldness, conventional pieties, contrarianism or wit. The project manager of the Good Judgment Project, Terry Murray, says simply, “The only thing that matters is the right answer.”

I asked Murray for her tips on how to be a good forecaster. Her reply was, “Keep score.”

…

An intriguing footnote to Philip Tetlock’s original humbling of the experts was that the forecasters who did best were what Tetlock calls “foxes” rather than “hedgehogs”. He used the term to refer to a particular style of thinking: broad rather than deep, intuitive rather than logical, self-critical rather than assured, and ad hoc rather than systematic. The “foxy” thinking style is now much in vogue. Nate Silver, the data journalist most famous for his successful forecasts of US elections, adopted the fox as the mascot of his website as a symbol of “a pluralistic approach”.

The trouble is that Tetlock’s original foxes weren’t actually very good at forecasting. They were merely less awful than the hedgehogs, who deployed a methodical, logical train of thought that proved useless for predicting world affairs. That world, apparently, is too complex for any single logical framework to encompass.

More recent research by the Good Judgment Project investigators leaves foxes and hedgehogs behind but develops this idea that personality matters. Barbara Mellers told me that the thinking style most associated with making better forecasts was something psychologists call “actively open-minded thinking”. A questionnaire to diagnose this trait invites people to rate their agreement or disagreement with statements such as, “Changing your mind is a sign of weakness.” The project found that successful forecasters aren’t afraid to change their minds, are happy to seek out conflicting views and are comfortable with the notion that fresh evidence might force them to abandon an old view of the world and embrace something new.

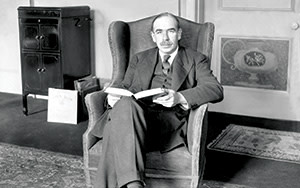

Which brings us to the strange, sad story of Irving Fisher and John Maynard Keynes. The two men had much in common: both giants in the field of economics; both best-selling authors; both, alas, enthusiastic and prominent eugenicists. Both had immense charisma as public speakers.

Fisher and Keynes also shared a fascination with financial markets, and a conviction that their expertise in macroeconomics and in economic statistics should lead to success as an investor. Both of them, ultimately, were wrong about this. The stock market crashes of 1929 – in September in the UK and late October in the US – caught each of them by surprise, and both lost heavily.

Yet Keynes is remembered today as a successful investor. This is not unreasonable. A study by David Chambers and Elroy Dimson, two financial economists, concluded that Keynes’s track record over a quarter century running the discretionary portfolio of King’s College Cambridge was excellent, outperforming market benchmarks by an average of six percentage points a year, an impressive margin.

This wasn’t because Keynes was a great economic forecaster. His original approach had been predicated on timing the business cycle, moving into and out of different investment classes depending on which way the economy itself was moving. This investment strategy was not a success, and after several years Keynes’s portfolio was almost 20 per cent behind the market as a whole.

The secret to Keynes’s eventual profits is that he changed his approach. He abandoned macroeconomic forecasting entirely. Instead, he sought out well-managed companies with strong dividend yields, and held on to them for the long term. This approach is now associated with Warren Buffett, who quotes Keynes’s investment maxims with approval. But the key insight is that the strategy does not require macroeconomic predictions. Keynes, the most influential macroeconomist in history, realised not only that such forecasts were beyond his skill but that they were unnecessary.

Irving Fisher’s mistake was not that his forecasts were any worse than Keynes’s but that he depended on them to be right, and they weren’t. Fisher’s investments were leveraged by the use of borrowed money. This magnified his gains during the boom, his confidence, and then his losses in the crash.

But there is more to Fisher’s undoing than leverage. His pre-crash gains were large enough that he could easily have cut his losses and lived comfortably. Instead, he was convinced the market would turn again. He made several comments about how the crash was “largely psychological”, or “panic”, and how recovery was imminent. It was not.

One of Fisher’s major investments was in Remington Rand – he was on the stationery company’s board after selling them his “Index Visible” invention, a type of Rolodex. The share price tells the story: $58 before the crash, $28 by 1930. Fisher topped up his investments – and the price soon dropped to $1.

Fisher became deeper and deeper in debt to the taxman and to his brokers. Towards the end of his life, he was a marginalised figure living alone in modest circumstances, an easy target for scam artists. Sylvia Nasar writes in Grand Pursuit, a history of economic thought, “His optimism, overconfidence and stubbornness betrayed him.”

…

So what is the secret of looking into the future? Initial results from the Good Judgment Project suggest the following approaches. First, some basic training in probabilistic reasoning helps to produce better forecasts. Second, teams of good forecasters produce better results than good forecasters working alone. Third, actively open-minded people prosper as forecasters.

But the Good Judgment Project also hints at why so many experts are such terrible forecasters. It’s not so much that they lack training, teamwork and open-mindedness – although some of these qualities are in shorter supply than others. It’s that most forecasters aren’t actually seriously and single-mindedly trying to see into the future. If they were, they’d keep score and try to improve their predictions based on past errors. They don’t.

This is because our predictions are about the future only in the most superficial way. They are really advertisements, conversation pieces, declarations of tribal loyalty – or, as with Irving Fisher, statements of profound conviction about the logical structure of the world. As Roger Babson explained, not without sympathy, Fisher had failed because “he thinks the world is ruled by figures instead of feelings, or by theories instead of styles”.

Poor Fisher was trapped by his own logic, his unrelenting optimism and his repeated public declarations that stocks would recover. And he was bankrupted by an investment strategy in which he could not afford to be wrong.

Babson was perhaps wrong as often as he was right – nobody was keeping track closely enough to be sure either way – but that did not stop him making a fortune. And Keynes prospered when he moved to an investment strategy in which forecasts simply did not matter much.

Fisher once declared that “the sagacious businessman is constantly forecasting”. But Keynes famously wrote of long-term forecasts, “About these matters there is no scientific basis on which to form any calculable probability whatever. We simply do not know.”

Perhaps even more famous is a remark often attributed to Keynes. “When my information changes, I alter my conclusions. What do you do, sir?”

If only he had taught that lesson to Irving Fisher.

Tim Harford’s latest book is ‘The Undercover Economist Strikes Back’

——————————————-

Evaluating forecasts

Those of us who appreciate seeing experts brought low have long been able to savour absurd prophecies – from the Decca executive who told The Beatles that “guitar bands are on the way out” to Margaret Thatcher’s assertion in 1969 that “No woman in my time will be prime minister or foreign secretary.”

Tetlock’s experimental subjects, who were promised anonymity, produced no such gems. Their forecasting failures were dry and quantitative, tracked mercilessly by a series of mathematical scoring systems. By way of illustration, consider a binary event: either Bashar al-Assad will be president of Syria on December 31 2014 or he will not. We ask an analyst for a probability that it will occur. We could, in principle, collect regular forecast updates until the year ends.

With hindsight the maximum score would have been earned by a forecaster who assigned a probability of 100 per cent to every event that occurred, and zero per cent to every event that did not. A good score would be earned by a forecaster who gave an 80 per cent probability to some events, 80 per cent of which occurred; and who gave a 20 per cent probability to other events, 20 per cent of which occurred. The dart-throwing chimpanzee of legend would give a 50 per cent probability to every binary event. The easiest way for an expert to fall short of the chimp would be to confidently predict events that did not happen.
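To make the scoring concrete, here is a minimal sketch in Python. The article does not say which scoring rule Tetlock used, so the Brier score (the mean squared gap between the stated probability and the 0-or-1 outcome, with lower being better) is an assumption chosen for illustration, and the 20 events below are invented.

```python
def brier_score(forecasts, outcomes):
    """Mean squared gap between stated probabilities and what happened.

    0.0 is a perfect score; 1.0 is the worst possible score.
    This rule is an assumption for illustration, not the article's stated method.
    """
    if len(forecasts) != len(outcomes):
        raise ValueError("need exactly one outcome per forecast")
    total = sum((p - o) ** 2 for p, o in zip(forecasts, outcomes))
    return total / len(forecasts)


# Hypothetical outcomes for 20 binary events (1 = occurred, 0 = did not).
# The first ten are events of which 80 per cent occurred,
# the last ten are events of which 20 per cent occurred.
outcomes = [1] * 8 + [0] * 2 + [1] * 2 + [0] * 8

# Hindsight: 100 per cent for everything that happened, zero for the rest.
perfect = [float(o) for o in outcomes]

# Well calibrated: says 80 per cent for the first group, 20 for the second.
calibrated = [0.8] * 10 + [0.2] * 10

# The dart-throwing chimp: 50 per cent for every binary event.
chimp = [0.5] * 20

# Confidently wrong: certain of exactly the events that did not happen.
confidently_wrong = [1.0 - o for o in outcomes]

for name, forecasts in [
    ("perfect", perfect),
    ("calibrated", calibrated),
    ("chimp", chimp),
    ("confidently wrong", confidently_wrong),
]:
    print(f"{name:>18}: {brier_score(forecasts, outcomes):.3f}")
```

Under this assumed rule the hindsight forecaster scores 0, the calibrated forecaster about 0.16, the chimp 0.25 and the confidently wrong expert 1. The ordering matches the text: calibration beats the chimp, and misplaced certainty is the quickest way to fall short of it.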

More complex scoring rules are possible: for example, we could consider three-way events. “Italy’s gross government debt to GDP ratio for 2014 will rise above 145 per cent as estimated in the IMF’s World Economic Outlook April 2015; it will stay between 125 and 145; or it will fall below 125 per cent.” The dart-throwing chimp would give each outcome a probability of one-third. How good the chimp looks will depend crucially on where the boundaries of each case are set, of course. Tetlock did this with reference to historical data, and both chimp and experts were given the same boundaries.
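A three-way question can be scored in the same spirit. The sketch below is an assumption rather than Tetlock's documented method: it uses a multi-category version of the Brier score, summing the squared gaps across all three outcomes. The analyst's probabilities and the outcomes tried are invented for illustration and say nothing about what Italy's debt ratio actually did.

```python
def multi_brier(probabilities, outcome_index):
    """Sum of squared gaps across every possible outcome of one question.

    0.0 is perfect; 2.0 is the worst score (certainty in a wrong outcome).
    An assumed scoring rule, used here only for illustration.
    """
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(
        (p - (1.0 if i == outcome_index else 0.0)) ** 2
        for i, p in enumerate(probabilities)
    )


# The three cases from the debt-to-GDP question in the text.
OUTCOMES = ["above 145", "125 to 145", "below 125"]

# The chimp gives each outcome one-third.
chimp = [1 / 3, 1 / 3, 1 / 3]

# A hypothetical analyst who leans towards the middle band.
analyst = [0.1, 0.7, 0.2]

# Score both forecasters under each outcome that might have happened.
for index, label in enumerate(OUTCOMES):
    print(
        f"if '{label}' happens: "
        f"chimp {multi_brier(chimp, index):.3f}, "
        f"analyst {multi_brier(analyst, index):.3f}"
    )
```

The chimp scores about 0.667 whichever band comes up. The hypothetical analyst scores 0.14 if the middle band happens but 1.34 if the ratio rises above 145, which is worse than the chimp. Moving the boundaries changes which band is most likely to come up, and with it how flattering the comparison with the chimp turns out to be.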

——————————————-

How to be a superforecaster

Some participants in the Good Judgment Project were given advice on how to transform their knowledge about the world into a probabilistic forecast – and this training, while brief, led to a sharp improvement in forecasting performance.

The advice, a few pages in total, was summarised with the acronym CHAMP:

● Comparisons are important: use relevant comparisons as a starting point;

● Historical trends can help: look at history unless you have a strong reason to expect change;

● Average opinions: experts disagree, so find out what they think and pick a midpoint;

● Mathematical models: when model-based predictions are available, you should take them into account;

● Predictable biases exist and can be allowed for. Don’t let your hopes influence your forecasts, for example; don’t stubbornly cling to old forecasts in the face of news.

Illustration by Laurie Rollitt

Photographs: Getty; AP; Corbis
